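Can't Afford to Hit Them

At an intersection, a Volkswagen and a BMW 7 Series are waiting at a red light when a Jetta comes charging straight at them like a lunatic. Just before it hits the 7 Series it swerves toward the Volkswagen, then the driver yells "Holy crap, a Phaeton!", yanks the wheel again, and flips a little pushcart by the roadside. The Jetta driver jumps out with an apologetic smile: "I can't afford to hit either of those two, so I had to hit your cart, old man, heh heh." Then he looks down and sees qiegao (Xinjiang nut cake) scattered all over the ground….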

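On September 28, 2012, the Ministry of Housing and Urban-Rural Development of the People's Republic of China issued the "Notice on Opening the 12329 Housing Provident Fund Hotline", which means more work for us software developers. Here is a brief look at how a 12329 hotline could be built.

The core of a telephone hotline platform is simply self-service voice (IVR) queries plus agent-answered calls. Most cities already have an online query system, and some also have their own voice hotline platform, so the question worth thinking about is how to reuse those existing resources to meet the notice's requirements.

Image source: 12329 Housing Provident Fund Hotline Service Guidelines

The principle: meet the requirements of the "12329 Housing Provident Fund Hotline Service Guidelines", make full use of the housing provident fund management center's existing resources, and combine them with other hotline platforms such as 12345 to deliver the 12329 hotline functions.

That is:

If both the city's 12345 hotline platform and the original provident fund hotline were built by our company, integrating them is the more attractive option, but several problems have to be solved first:

1) The two hotlines use different carriers, so the best option has to be negotiated;

2) 12345 and the provident fund belong to different departments, so the city government has to coordinate resources;

3) When a call is transferred from 12329 to 12345, the agent seat needs to know where the call came from so it can be connected directly, which means 12329 has to hold the call, and the carrier charges for that;

4) The 12345 hotline cannot tell whether the caller chose personal or employer services;

and so on.

The alternative is to build a brand-new voice platform. Our company has a long-standing partnership on voice equipment and has already developed a matching hotline service platform covering SMS, phone, Weibo, fax, three-way calling, and more. IVR flow development, provident fund query system interfaces, and custom features are all well within reach.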

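1. VMware Workstation Build 9.0.1 + serial number generator

Magnet link: download link 1

2. Mac OS X Mountain Lion 10.8.2 build 12C60, original image

Magnet link: download link 1

3. 7z archive tool

Open: download link 1

4. unlock-all-v110 patch for VMware 9

Open: CSDN download page

5. UltraISO 9.5.3

Open: Zhongguancun (ZOL) download page

1. Download the Mac OS X installer image InstallESD.dmg, open it with 7z, extract the installesd.dmg found in a subfolder, then use UltraISO to convert it to an iso file.

2. Generate a serial number with the generator, install VMware 9, unpack the VMware 9 patch, and double-click install.cmd under unlock-all-v110\windows.

1. I assume everyone has used VMware before, so I won't go over the basics (if not, Baidu or Google it o(∩_∩)o ).

Once the patch is installed, the Apple Mac OS X option shows up.

2. Next, configure the virtual machine, point it at the iso file, start it, and click Next, Next, Next……

A detailed tutorial is here: http://bbs.pcbeta.com/viewthread-1130227-1-1.html

3. To install VMware Tools, set the virtual machine's CD drive to darwin.iso under unlock-all-v110\tools, then open the drive inside Mac OS X and run the installer.

After that, the Mac guest supports the HD4000, full screen, and resolution changes.

1. Once the system is installed and you are logged in, visit this page from inside Mac OS X:

https://developer.apple.com/xcode/

Click the View Downloads link on the page and sign in with your Apple account to download.

2. Xcode 4.5.2 has changed a lot and older tutorials no longer apply; View-based Application and Window-based Application are nowhere to be found. I suggest buying an up-to-date tutorial. I bought one myself but won't post the link, to avoid advertising. I can't say how good it is yet because it hasn't arrived. - -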

到Chrome应用商店,扩展程序中搜索“英雄联盟”,即可看到英雄联盟游戏记录查看器,安装即可。

或者点击:https://chrome.google.com/webstore/detail/%E8%8B%B1%E9%9B%84%E8%81%94%E7%9B%9F%E6%B8%B8%E6%88%8F%E8%AE%B0%E5%BD%95/ndmclmhaiggkljkppdjalddcljjhihbg?hl=zh-CN

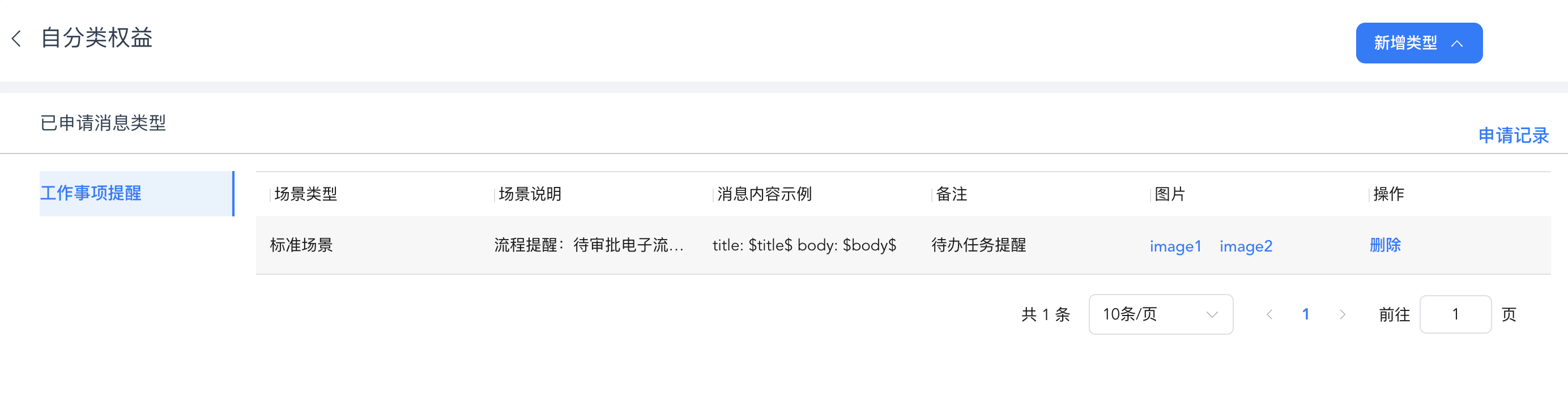

执行效果如下:

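Firefox add-ons come in two kinds, extensions and plugins. Most examples you find online are XUL application development, and a XUL add-on needs a browser restart after installation before it works. This post is about using the Add-on SDK to build JS+HTML+CSS applications that, like Chrome extensions, can be installed and used right away.

(If you are interested, see the previous post on this site, the Chrome extension development notes. Compare the two and you will find Chrome extension development is ridiculously easy - -)

Back to the topic. Add-on development can be done either with a downloadable SDK or online. Visit https://builder.addons.mozilla.org/, complete the simple registration, and click "Create an Add-on Now" to generate a project that already contains main.js, as shown in the screenshot. You can upload and edit your own files under the Data folder (if an uploaded file takes forever to show up on the left, press F5):

The add-on's entry point is main.js. There you can use require to request resources and privileges, whereas JS placed in the user's Data folder cannot call require directly, probably because the Add-on framework is designed not to allow arbitrary access to system resources. In that case you have to use port.on and port.emit to pass values and trigger functions across.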
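The split between main.js and the Data folder scripts can be pictured with a toy message port. This is only a sketch of the emit/on pattern and not the Add-on SDK itself: `createPortPair` and the message names `fetch-balance` and `balance-result` are hypothetical, made up for illustration.

```javascript
// Toy two-ended port: whatever one end emits, the other end's handlers receive.
// Hypothetical stand-in for the SDK's port object, for illustration only.
function createPortPair() {
  const handlers = { a: {}, b: {} };
  function makeEnd(self, other) {
    return {
      on(name, fn) {
        (handlers[self][name] = handlers[self][name] || []).push(fn);
      },
      emit(name, payload) {
        (handlers[other][name] || []).forEach((fn) => fn(payload));
      },
    };
  }
  return { main: makeEnd('a', 'b'), page: makeEnd('b', 'a') };
}

const ports = createPortPair();
const received = [];

// "main.js" side: holds the privileges and answers requests.
ports.main.on('fetch-balance', (account) => {
  ports.main.emit('balance-result', { account: account, balance: 'n/a' });
});

// "Data folder" side: cannot require() anything, so it asks main.js instead.
ports.page.on('balance-result', (result) => received.push(result));
ports.page.emit('fetch-balance', 'demo-account');
```

The point of the pattern is that the unprivileged script never touches system resources itself; it only sends a named message and waits for a named reply.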

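For a network request example, see https://builder.addons.mozilla.org/package/89576/ . It covers port.on and port.emit, creating a panel, including jQuery, making network requests, and so on. It is a very good example.

You can also learn from code other people have shared: go to https://builder.addons.mozilla.org/search/ and enter keywords to find the functionality you care about.

I won't go into the feature development itself. The simple query add-on I wrote can be downloaded by searching the add-on gallery for "合肥公积金查询" (Hefei provident fund query). After installing it, if you are on Windows 7, go to: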

C:\Users\Wizzer\AppData\Roaming\Mozilla\Firefox\Profiles\zah0wctd.default\extensions

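find wizzer.cn@gmail.com.xpi and unzip it. The source is not obfuscated, so feel free to use it for study and reference.

Other notes:

Packaging: select all files in the root folder, compress them into a zip file, and change the extension to xpi.

Installing: drag the xpi file into the Firefox window.

Debugging: press Ctrl+Shift+J to debug the add-on.

Online install page for the extension: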

https://addons.mozilla.org/zh-CN/firefox/addon/%E5%90%88%E8%82%A5%E5%85%AC%E7%A7%AF%E9%87%91%E6%9F%A5%E8%AF%A2/

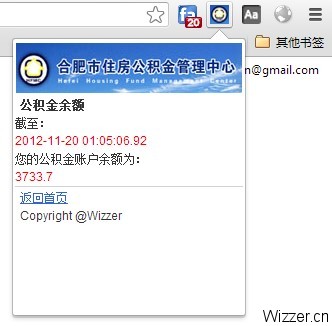

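Chrome extension development is extremely simple; knowing JS+HTML+CSS is enough. Of course, what I tinkered with is a simple app for learning and verification, and I did not dig any deeper.

This example uses jQuery to implement user login and a provident fund balance query. The interface offers many more functions, but since this is a learning exercise I only built the balance query.

2. Edit the manifest.json file and fill in the app information, access permissions, and so on: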

{

"name": "合肥公积金查询",

"version": "2012.11.20.0",

"manifest_version": 2,

"description": "这是一款合肥市住房公积金查询工具,用于学习测试仅提供余额查询。@Wizzer",

"icons":{"16":"16.png","48":"48.png"},

"content_scripts":[{

"js": [ "scripts/lib/jquery-1.7.2.min.js","scripts/main.js"],

"matches": [ "http://*/*", "https://*/*" ]

}],

"browser_action": {

"default_icon": "16.png",

"default_popup": "index.html"

},

"homepage_url":"",

"permissions": [ "cookies", "tabs", "http://*/*", "https://*/*" ]

}

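Notes:

A. The latest version of Chrome currently requires "manifest_version": 2;

B. permissions includes the cookies permission because the app has a remember-password feature;

C. The API does not allow inline JS, meaning you cannot write JS directly on page elements; for example, an alert in a button's onclick attribute will simply not run;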
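To make note C concrete, here is a minimal sketch of wiring a click handler from a script file instead of an inline attribute. The helper name `bindClick` is hypothetical; `bt` is the button id used by the popup script in this post.

```javascript
// Attach a click handler from script instead of an inline onclick attribute.
// `doc` is passed in so the function works with any Document-like object.
function bindClick(doc, id, handler) {
  const el = doc.getElementById(id);
  if (!el) return false; // nothing to bind to
  el.addEventListener('click', handler);
  return true;
}

// In the popup this would run once the DOM is ready, for example:
// document.addEventListener('DOMContentLoaded', function () {
//   bindClick(document, 'bt', function () { /* start the query */ });
// });
```

Passing the document in as a parameter is just a design convenience: it keeps the helper usable outside the browser, for example with a stub object.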

var url = "/chrome";

var key = "接口APP_KEY隐藏";

var zgyhzh="";

var dwyhzh="";

var uptime="";

var hm="";

var mm="";

if (!chrome.cookies) {

chrome.cookies = chrome.experimental.cookies;

}

function delCookie(name) {

chrome.cookies.remove({"name": name,"url":url});

}

function setCookie(name,value) {

chrome.cookies.set({"name": name,"value":value,"url":url ,"expirationDate":1392000000});

}

function initCookie() {

var str="";

chrome.cookies.get({"name": "hm","url":url },function(cookie){

str = cookie ? cookie.value : ""; // cookie is null when nothing was saved

if(""!=str){

$('#savehm').attr("checked",true);

$('#hm').val(Base64.decode(str));

}

});

chrome.cookies.get({"name": "mm","url":url },function(cookie){

str = cookie ? cookie.value : ""; // cookie is null when nothing was saved

if(""!=str){

$('#savemm').attr("checked",true);

$('#mm').val(Base64.decode(str));

}

});

}

function login(){

hm=Base64.encode($('#hm').val());

mm=Base64.encode($('#mm').val());

$.ajax({

url : "http://220.178.98.86/hfgjj/service/login.jsp",

data : {"hm":hm,"mm":mm,"app_key":key} ,

success : function (res) {

loginData(res);

return false;

},

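error : function(xhr, status) {

// jQuery's $.ajax option is "error", not "fail"; it receives the XHR object, not the response text

$("#tip").html("Request failed: " + status);

}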

});

}

function loginData(res){

if(res.indexOf("error")>=0){

var obj = jQuery.parseJSON(res);

if(""!=obj.tip){

$("#tip").html(obj.tip);

}

}else if(res.indexOf("more")>=0){

var obj = jQuery.parseJSON(res);

if("false"==obj.more){

zgyhzh=obj.zgyhzh;

dwyhzh=obj.dwyhzh;

uptime=obj.uptime;

oneData();

}else if("true"==obj.more){

uptime=obj.uptime;

var zgyhzhlist=obj.zgyhzhlist;

$.each(zgyhzhlist,function(entryIndex,entry){

if("true"==entry.zt){

zgyhzh=entry.zgyhzh;

dwyhzh=entry.dwyhzh;

oneData();

$("#note")[0].style.display='block';

return;

}

});

}

}else{

$("#tip").html(res);

}

}

function oneData(){

var z=Base64.encode(zgyhzh);

var d=Base64.encode(dwyhzh);

$.ajax({

url : "http://220.178.98.86/hfgjj/service/grindex.jsp",

data : {"hm":hm,"mm":mm,"app_key":key,"zgyhzh":z,"dwyhzh":d} ,

success : function (res) {

showData(res);

return false;

},

error : function(xhr, status) {

$("#tip").html("Request failed: " + status);

}

});

}

function showData(res){

if(res.indexOf("error")>=0){

var obj = jQuery.parseJSON(res);

if(""!=obj.tip){

$("#tip").html(obj.tip);

}

}else{

$("#T1")[0].style.display='none';

$("#T2")[0].style.display='block';

var obj = jQuery.parseJSON(res);

var scje=obj.scje;

$("#uptime").html(""+uptime+"");

$("#yue").html(""+scje+"");

}

}

function init(){

initCookie();

$('#bt').click(function() {

if($('#savemm').attr('checked')){

setCookie("mm",Base64.encode($('#mm').val()));

setCookie("hm",Base64.encode($('#hm').val()));

}else if($('#savehm').attr('checked')){

setCookie("mm","");

setCookie("hm",Base64.encode($('#hm').val()));

}else{

setCookie("mm","");

setCookie("hm","");

}

$("#tip").html("Loading...");

login();

});

$('#savemm').click(function() {

if($('#savemm').attr('checked')){

$('#savehm').attr("checked",true);

}else{

$('#savehm').attr("checked",false);

}

});

}

document.addEventListener('DOMContentLoaded', function () {

init();

});

PS: Any developer can read this JS, so I didn't bother commenting it...

合肥市住房公积金查询 BODY { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } DIV { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } P { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } EM { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } SPAN { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } A { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } TD { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } FORM { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } BUTTON { PADDING-BOTTOM: 0px; LINE-HEIGHT: 1.5em; MARGIN: 0px; PADDING-LEFT: 0px; PADDING-RIGHT: 0px; WORD-WRAP: break-word; FONT-SIZE: 12px; WORD-BREAK: break-all; PADDING-TOP: 0px } body {COLOR: #333 ;min-width: 220px; margin: 0; font: 12px "Helvetica Neue", Helvetica, Arial, sans-serif; width: auto} EM { FONT-WEIGHT: bold } STRONG { FONT-WEIGHT: bold } DEL { TEXT-DECORATION: line-through } INPUT { MARGIN: 2px 0px; FONT-SIZE: 12px } SELECT { MARGIN: 2px 0px; FONT-SIZE: 12px } IMG { BORDER-BOTTOM: medium none; BORDER-LEFT: medium none; 
BORDER-TOP: medium none; BORDER-RIGHT: medium none } HR { BORDER-BOTTOM: 0px; BORDER-LEFT: 0px; HEIGHT: 0px; CLEAR: both; BORDER-TOP: #ddd 1px solid; BORDER-RIGHT: 0px } A:link { COLOR: #36c; TEXT-DECORATION: underline } A:visited { COLOR: #36c; TEXT-DECORATION: underline } A.im { COLOR: #f60 } .im { COLOR: #f60 } .imp { COLOR: #f00 } .gp { COLOR: #06c } .mp { COLOR: #f90 } .module { PADDING-BOTTOM: 0px; PADDING-LEFT: 5px; PADDING-RIGHT: 5px; PADDING-TOP: 0px } .wrapper { } .none { DISPLAY: none } .header { BORDER-BOTTOM: #d2d2d2 1px solid; PADDING-BOTTOM: 2px; PADDING-LEFT: 5px; PADDING-RIGHT: 5px; BACKGROUND: #f5f5f5; PADDING-TOP: 2px } .footer { PADDING-BOTTOM: 2px; PADDING-LEFT: 5px; PADDING-RIGHT: 5px; BORDER-TOP: #d2d2d2 1px solid; PADDING-TOP: 2px } .logo { PADDING-BOTTOM: 0px; PADDING-LEFT: 1px; PADDING-RIGHT: 1px; PADDING-TOP: 2px } .logo A { FONT-SIZE: 14px; TEXT-DECORATION: none } .nav { PADDING-BOTTOM: 5px; PADDING-LEFT: 5px; PADDING-RIGHT: 5px; PADDING-TOP: 5px } .cl { } .module { MARGIN-TOP: 3px } .gtt { TEXT-ALIGN: center } .capt { VERTICAL-ALIGN: middle } .err { BACKGROUND: #fc9 } .ptit { COLOR: #000; FONT-SIZE: 14px }

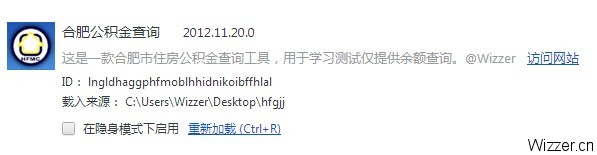

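Menu > Tools > Extensions > Load unpacked extension, and a 16*16 icon appears in the top-right corner, o(∩_∩)o haha

Before publishing your first item, you must pay a one-time developer registration fee of US$5.00. We charge this fee to verify developer accounts and to better protect users against fraudulent activity. Once you have paid the registration fee, you can publish any number of items without paying it again.

First you need a VISA credit card; sign in to Google Wallet and register the card.

https://www.google.com/checkout/ (note the https, you know why)

Note that the credit card form that pops up first is not the one in the screenshot, and payment will probably fail with it. When that happens, go to "Payment methods", choose to edit the credit card, and in the form that appears select New York and enter a valid zip code; then the payment goes through.

Go to the developer dashboard (at the bottom of the Extensions page click "Get more extensions" to reach the Web Store)

In the developer dashboard, edit your user preferences and tick "Enable user feedback for all my apps in the Chrome Web Store"; otherwise clicking "Pay the registration fee now" does nothing (if all else fails, try IE).

After paying, wait patiently. I'm still waiting for my order to be reviewed……

In the developer dashboard click Add new item, zip the project folder, and upload it. The rest is self-explanatory..

During many of the steps above you will find that one page won't open and another hangs forever. This is when you need the Chrome extension "SwitchySharp". Search Baidu for how to use it; there are many steps and it is a hassle, so the key is to follow a tutorial patiently.

SwitchySharp works in Chrome, but what about IE? Just set the proxy manually in IE; the port number can be found in the SwitchySharp options.

See the tutorial at http://www.delver.net/?p=267 ~~

Once the order is reviewed and the app is published, you can search the Chrome Web Store for "合肥公积金查询"; that is the app I wrote. If you are on Windows 7, the source code can be found in this folder:

C:\Users\Wizzer\AppData\Local\Google\Chrome\User Data\Default\Extensions

Over~~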

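A few thoughts. A Chrome extension is a JS+HTML+CSS web app and cannot run JSP, ASP, PHP, or the like directly; everything has to go through Chrome's APIs or interfaces your own application exposes. So could you embed your own application with an iframe instead (- -)?

Then there is the problem of passing session state: how do you carry information from one page to another? Cookies don't feel quite right, and you can't put all the logic in a single page either. This needs deeper study.

Finally, because JS cannot be inline, some features are more awkward to implement, such as dynamically creating clickable buttons. I haven't thought of a good way yet, though I believe it can be solved with more digging. You need to know JS really well, and I'm only so-so. - -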

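Back in 2008 I had not been in IT for long and lacked experience. As project team lead I chose MySQL as the database and, with a few programmers even less experienced than me, built an information publishing system for the Xx government.

What I regret most to this day: choosing MySQL, a poorly designed table structure, and not carefully reviewing the code of low-skilled programmers. Since going live in 2008, the number of user organizations has grown from about 80 to 940, with nearly a thousand users in total.

As the data grew, the front end often failed to load and back-office users could not enter articles. I don't know exactly when it started, but the problem kept getting worse. From 2011 onward, whenever something broke we restarted Tomcat, and there was never a proper fix.

This year I went through the code thoroughly and discovered that one programmer had written a statement that, on every insert, did a select * on the largest table and loaded all of it into memory! Most infuriating of all, after putting the data into an in-memory variable, none of the code below ever used that variable! (I kept wondering how much he must have hated me to leave that time bomb behind.)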

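Of course, finding that one fatal line did not solve the whole series of problems. Drawing on four years of experience, I put together this plan:

1. Replace the MySQL database with Oracle;

We developed a data extraction tool that supports all kinds of data sources and handles data migration/conversion through configuration, moving the old system's data into the new system seamlessly.

2. Optimize the table structure with horizontal/vertical table splitting;

Vertical splitting moves large fields out of the main table, which speeds up paged queries.

3. Separate front end from back end, with the front end using the Lucene search engine;

Lucene updates the index on a schedule (incremental or full rebuilds do not interrupt queries).

Lucene is very efficient, so the front-end pages do not need to be rendered statically; for a front end that is mostly queries, static generation would actually hurt performance.

4. Scheduled statistics jobs update according to publishing status;

A status table was added: organizations with updated articles get their statistics recomputed, and those without updates are skipped.

5. Optimize paged query statements for Oracle so that only the current page's rows are fetched.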
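For item 5, one common way to fetch only the current page from Oracle is the nested ROWNUM pattern. The sketch below only builds the SQL string; it is an illustration, not the statement the project actually used, and the function name `oraclePageSql` and the `articles` table are hypothetical.

```javascript
// Wrap an already-ordered query so Oracle returns only one page of rows.
// Uses the classic nested ROWNUM pattern; page numbers start at 1.
function oraclePageSql(innerSql, page, pageSize) {
  const p = Math.max(1, Math.floor(page));
  const size = Math.max(1, Math.floor(pageSize));
  const first = (p - 1) * size; // number of rows to skip
  const last = p * size;        // row number of the last row on this page
  return (
    'SELECT * FROM (' +
    'SELECT t.*, ROWNUM rn FROM (' + innerSql + ') t WHERE ROWNUM <= ' + last +
    ') WHERE rn > ' + first
  );
}

// Page 3 with 20 rows per page covers row numbers 41 to 60.
const pagedSql = oraclePageSql(
  'SELECT id, title FROM articles ORDER BY pdate DESC', 3, 20);
```

The inner `ROWNUM <= last` lets Oracle stop reading early, and the outer `rn > first` drops the rows that belong to earlier pages.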

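Switching databases really was a last resort. After a month and a half of work by three or four people, the new system was finally done and went live last month, and now the front end opens in a flash. I often admire it by myself, opening it up for a look whether I need to or not. Well, software people all seem to like looking at their own products?..

Articles about Lucene and apache+tomcat can be found by searching this site. That said, this system's problems were solved once it moved to Lucene, so no load balancing was set up.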

Edit the configuration file so that logs are created by date.

CustomLog "|D:/apache2/bin/rotatelogs.exe D:/apache2/logs/access%Y%m_%d.log 86400 480" common

ErrorLog "|D:/apache2/bin/rotatelogs.exe D:/apache2/logs/error%Y%m_%d.log 86400 480"

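A 90-year-old IT guy lies limp in bed. I say: "Get up and eat something…" He says: "I'm old, no appetite." I say: "A bunch of IT girls just showed up downstairs…" Even more feebly he answers: "My eyes are gone, can't see them." Just then the programmer next door runs over: "Hey, the code you wrote 60 years ago just threw another bug!" IT guy: "Damn it, help me up!"

I'm a programmer. One day I was sitting by the road, drinking water and hunting for a bug. A beggar sat down beside me and started begging. I felt sorry for him and gave him 1 yuan, then went back to debugging. Business must have been slow, because he idly watched what I was doing, and after a while he said quietly: you're missing a semicolon here.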

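From owning my first computer back in school until now, I have bought one desktop, one laptop, and two ThinkPads.

The first was a self-built desktop bought in 2004: single-core 1.7GHz Celeron, 80G hard disk, 256M RAM, 128M video memory, and a 17-inch "LCD" monitor (OK, fine, a thick, clunky monitor - -). Buying a computer wasn't all that common back then; ours was one of only a few households in the village that had one. During summer vacation that year I even hauled the tower and monitor home by long-distance bus to play on. Dial-up internet was really expensive. Looking back it's hard to imagine being that hooked.

I remember running Windows 98 and later switching to XP. The games I played most were probably CS, BnB (Crazy Arcade), and MapleStory; I don't remember the rest. After graduating I moved the computer back to my hometown and sold it cheap. Sob~

The second was a Lenovo laptop, I think the Xuri (旭日) 410L, the first model styled after the ThinkPad: 1.6GHz second-generation Celeron, RAM upgraded to 512M, 80G hard disk, XP. In 2006 I had just left my internship and found a software company; with the job not yet settled, I took an advance from the company to buy it and had money deducted from my salary every month afterward. A laptop made things much easier. I carried it everywhere, to work, back home, to my hometown, and the habit continues to this day.

The third was a ThinkPad R400: P8700 dual-core, two-thread CPU, 2G RAM, 250G hard disk. In 2010 the company put up 5K to keep me from leaving and I added 2K of my own. The 410L went to my third sister's little boy; no idea whether it still boots. Later I gradually upgraded the R400 to 8G RAM and a 500G hard disk plus a 250G disk in the optical bay. The CPU can't be upgraded, and with technology moving so fast its performance has fallen behind. After more than two years of use, a USB port wore out and had to be repaired, and the keyboard and mouse were replaced too. A while ago I handed it to a friend to sell for me; no idea what it will fetch.

Compared with the delicate, pricey MacBook, which feels too flashy for software development, I still prefer the ThinkPad's steady business look. CPUs have reached quad-core, eight-thread i7s and SSDs are all the rage, but prices easily run past ten thousand yuan. My salary rises every year, yet after buying an apartment money feels tight, and I honestly can't afford an officially imported unit.

I often browse forums, Taobao, and the like, and took a liking to the X230: light, with the new chiclet keyboard, keyboard backlight, fingerprint reader, and IPS screen. After the National Day holiday the whole line dropped by ¥500. Thinking of lugging the heavy R400 to and from work, I gritted my teeth and bought a gray-market unit on Taobao in installments. Be sure to pick a seller with a good reputation; I won't post the link, to avoid advertising. I added a 128G SSD, and luckily the machine has a spare mSATA slot, so the original hard disk could stay.

Here are the detailed specs:

Model: Lenovo ThinkPad X230 laptop

OS: Windows 7 Ultimate 64-bit SP1 (DirectX 11)

CPU: Intel 3rd-generation Core i7-3520M @ 2.90GHz, dual-core, four threads

Motherboard: Lenovo 2324B76 (Intel Ivy Bridge)

Memory: 8 GB (Samsung DDR3 1600MHz)

Primary disk: Crucial M4-CT128M4SSD3 (128 GB / SSD)

Secondary disk: Hitachi HTS725050A7E630 (500GB / 7200 rpm)

Graphics: Intel Ivy Bridge Graphics Controller (2112 MB / Lenovo)

Display: Lenovo LEN40E2 (12.7 inch)

Sound card: Realtek ALC269 @ Intel Panther Point High Definition Audio Controller

Network card: Intel 82579LM Gigabit Network Connection / Lenovo

That should finally satisfy me, but this configuration is not the best or the highest, and I'm sure it will be outdated before long. Looking back at this post in two years or more will probably stir up quite a few feelings. Off to play LoL (League of Legends)~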

Tomcat6 : http://tomcat.apache.org/download-60.cgi

Download: apache-tomcat-6.0.36.exe

apache httpd server 2.2: http://www.fayea.com/apache-mirror//httpd/binaries/win32/

Download: httpd-2.2.22-win32-x86-no_ssl.msi

apache tomcat connector: http://archive.apache.org/dist/tomcat/tomcat-connectors/jk/binaries/win32/jk-1.2.31/

Download: mod_jk-1.2.31-httpd-2.2.3.so

Install paths:

E:\Apache2.2

E:\apache-tomcat-6.0.36-1

E:\apache-tomcat-6.0.36-2

Project path:

E:\work\demo

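Edit the E:\Apache2.2\conf\httpd.conf file.

1) Load the external configuration file:

Add this as the last line of the file:

include conf/mod_jk.conf

2) Configure the project path:

Alias /demo "E:/work/demo"

ScriptAlias /cgi-bin/ "E:/Apache2.2/cgi-bin/"

3) Configure directory permissions:

Order Deny,Allow

Allow from all

4) Configure the default index page by adding index.jsp:

DirectoryIndex index.jsp index.html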

5) Create E:\Apache2.2\conf\mod_jk.conf with this content:

LoadModule jk_module modules/mod_jk-1.2.31-httpd-2.2.3.so

JkWorkersFile conf/workers.properties

# Which requests are handed to Tomcat; "controller" is the load-balancing controller name defined in workers.properties

JkMount /*.jsp controller

Also copy the mod_jk-1.2.31-httpd-2.2.3.so file into the E:\Apache2.2\modules folder.

6) Create E:\Apache2.2\conf\workers.properties with this content:

#server

worker.list = controller

#========tomcat1========

worker.tomcat1.port=10009

worker.tomcat1.host=localhost

worker.tomcat1.type=ajp13

worker.tomcat1.lbfactor = 1

#========tomcat2========

worker.tomcat2.port=11009

worker.tomcat2.host=localhost

worker.tomcat2.type=ajp13

worker.tomcat2.lbfactor = 1

#========controller, the load-balancing controller========

worker.controller.type=lb

worker.controller.balanced_workers=tomcat1,tomcat2

worker.controller.sticky_session=false

worker.controller.sticky_session_force=1

#worker.controller.sticky_session=1
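To give a feel for what lbfactor means, here is a toy weighted balancer. It is a deliberately simplified illustration and not mod_jk's actual algorithm: it always picks the worker whose served/weight ratio is lowest, so equal weights alternate and a heavier weight gets proportionally more requests. `createBalancer` is a hypothetical name.

```javascript
// Simplified weighted balancing: always pick the worker whose
// served/weight ratio is lowest. Illustration only, not mod_jk's real code.
function createBalancer(workers) {
  const state = workers.map((w) => ({ name: w.name, weight: w.lbfactor, served: 0 }));
  return function nextWorker() {
    let best = state[0];
    for (const s of state) {
      if (s.served / s.weight < best.served / best.weight) best = s;
    }
    best.served += 1;
    return best.name;
  };
}

// Same weights as in workers.properties: both Tomcats have lbfactor 1.
const nextWorker = createBalancer([
  { name: 'tomcat1', lbfactor: 1 },
  { name: 'tomcat2', lbfactor: 1 },
]);
const picks = [nextWorker(), nextWorker(), nextWorker(), nextWorker()];
```

With sticky sessions turned off, as in the configuration here, consecutive requests from one browser can land on different Tomcats, which is exactly why the session has to be replicated between them.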

1) Tomcat-1 configuration

E:\apache-tomcat-6.0.36-1\conf\server.xml

Places where the port needs to be changed:

2) Tomcat-2 configuration

E:\apache-tomcat-6.0.36-2\conf\server.xml

Places where the port needs to be changed:

The project's web.xml file needs <distributable/> added under <web-app>.

test.jsp

Cluster App Test

Server Info:

<%

out.println(request.getLocalAddr() + " : " + request.getLocalPort());

%>

<%

out.println("ID " + session.getId());

// If a new session attribute was submitted, store it

String dataName = request.getParameter("dataName");

if (dataName != null && dataName.length() > 0) {

String dataValue = request.getParameter("dataValue");

session.setAttribute(dataName, dataValue);

}

out.println("Session list");

System.out.println("============================");

Enumeration e = session.getAttributeNames();

while (e.hasMoreElements()) {

String name = (String) e.nextElement();

String value = session.getAttribute(name).toString();

out.println(name + " = " + value);

System.out.println(name + " = " + value);

}

%>

Name:

Value:

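Start the Apache2 service first, then start the two Tomcats one after the other. Visit each of:

http://127.0.0.1:10080/test.jsp

http://127.0.0.1:11080/test.jsp

http://127.0.0.1/test.jsp

Then run the test, you know the drill: if the session contents are the same on all three, the configuration works.

1. If the test fails, check the logs for errors, see whether a configuration step was missed or Apache's permissions were not set, and watch the versions;

2. Objects stored in the session must be serializable, i.e. the class implements Serializable.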

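I went to my sister's place for a free meal of steamed crab. My brother-in-law picked one up for me and one for my four-year-old niece. "Daddy, you eat." "Daddy's not eating, they're for your aunt and the baby." My little niece said: "Daddy, you can't be like this. You have to treat yourself better. You work like an ox every day and don't even eat. If you work yourself to death, some other uncle will spend your money, live in your house, sleep with your wife, and hit your baby! Eat! Hurry up and eat!"

On the street a couple is arguing. The girl says, "Let's break up!" The boy is silent for a long while, then asks, "May I say one last thing?" "Go on, stop dithering." "I can program……" "What good is programming? Everyone can program these days!" Blushing, the boy goes on, "I can program…… I can become…… (in Chinese, "program", biancheng, and "become", biancheng, sound almost the same) the angel you love from the fairy tales……"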

Download lucene 3.6.1: http://lucene.apache.org/

Download the Chinese word segmenter IK Analyzer: http://code.google.com/p/ik-analyzer/downloads/list (be sure to download IK Analyzer 2012_u5_source.zip; other versions have bugs)

Download solr 3.6.1: http://lucene.apache.org/solr/ (its jars are needed when compiling IK Analyzer)

OK, copy the lucene and solr jars (lucene-core-3.6.1.jar, lucene-highlighter-3.6.1.jar, lucene-analyzers-3.6.1.jar, apache-solr-core-3.6.1.jar, apache-solr-solrj-3.6.1.jar) into the project's lib folder and put the IK source under the project's src.

package lucene.util;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriterConfig;

import org.apache.lucene.index.CorruptIndexException;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.store.Directory;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.util.Version;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.wltea.analyzer.lucene.IKAnalyzer;

import java.sql.Connection;

import java.io.File;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Date;

import modules.gk.Gk_info;

import modules.gk.Gk_infoSub;

import web.sys.Globals;

import web.db.DBConnector;

import web.db.ObjectCtl;

import web.util.StringUtil;

//Wizzer.cn

public class LuceneIndex {

IndexWriter writer = null;

FSDirectory dir = null;

boolean create = true;//是否初始化&覆盖索引库

public void init() {

long a1 = System.currentTimeMillis();

System.out.println("[Lucene 开始执行:" + new Date() + "]");

Connection con = DBConnector.getconecttion(); //取得一个数据库连接

try {

final File docDir = new File(Globals.SYS_COM_CONFIG.get("sys.index.path").toString());//E:\lucene

if (!docDir.exists()) {

docDir.mkdirs();

}

String cr = Globals.SYS_COM_CONFIG.get("sys.index.create").toString();//true or false

if ("false".equals(cr.toLowerCase())) {

create = false;

}

dir = FSDirectory.open(docDir);

// Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_36);

Analyzer analyzer = new IKAnalyzer(true);

IndexWriterConfig iwc = new IndexWriterConfig(Version.LUCENE_36, analyzer);

if (create) {

// Create a new index in the directory, removing any

// previously indexed documents:

iwc.setOpenMode(IndexWriterConfig.OpenMode.CREATE);

} else {

// Add new documents to an existing index:

iwc.setOpenMode(IndexWriterConfig.OpenMode.CREATE_OR_APPEND);

}

writer = new IndexWriter(dir, iwc);

String sql = "SELECT indexno,title,describes,pdate,keywords FROM TABLEA WHERE STATE=1 AND SSTAG<>1 "; // only rows not yet indexed

int rowCount = ObjectCtl.getRowCount(con, sql);

int pageSize = StringUtil.StringToInt(Globals.SYS_COM_CONFIG.get("sys.index.size").toString()); //每页记录数

int pages = (rowCount - 1) / pageSize + 1; //计算总页数

ArrayList list = null;

Gk_infoSub gk = null;

for (int i = 1; i < pages+1; i++) {

long a = System.currentTimeMillis();

list = ObjectCtl.listPage(con, sql, i, pageSize, new Gk_infoSub());

for (int j = 0; j < list.size(); j++) {

gk = (Gk_infoSub) list.get(j);

Document doc = new Document();

doc.add(new Field("indexno", StringUtil.null2String(gk.getIndexno()), Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));//主键不分词

doc.add(new Field("title", StringUtil.null2String(gk.getTitle()), Field.Store.YES, Field.Index.ANALYZED));

doc.add(new Field("describes", StringUtil.null2String(gk.getDescribes()), Field.Store.YES, Field.Index.ANALYZED));

doc.add(new Field("pdate", StringUtil.null2String(gk.getPdate()), Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));//日期不分词

doc.add(new Field("keywords", StringUtil.null2String(gk.getKeywords()), Field.Store.YES, Field.Index.ANALYZED));

writer.addDocument(doc);

ObjectCtl.executeUpdateBySql(con,"UPDATE TABLEA SET SSTAG=1 WHERE indexno='"+gk.getIndexno()+"'");//更新已索引状态

}

long b = System.currentTimeMillis();

long c = b - a;

System.out.println("[Lucene " + rowCount + "条," + pages + "页,第" + i + "页花费时间:" + c + "毫秒]");

}

writer.commit();

} catch (Exception e) {

e.printStackTrace();

} finally {

DBConnector.freecon(con); //释放数据库连接

try {

if (writer != null) {

writer.close();

}

} catch (CorruptIndexException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

try {

if (dir != null && IndexWriter.isLocked(dir)) {

IndexWriter.unlock(dir);//注意解锁

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

long b1 = System.currentTimeMillis();

long c1 = b1 - a1;

System.out.println("[Lucene 执行完毕,花费时间:" + c1 + "毫秒,完成时间:" + new Date() + "]");

}

}

package lucene.util;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.store.Directory;

import org.apache.lucene.search.*;

import org.apache.lucene.search.highlight.SimpleHTMLFormatter;

import org.apache.lucene.search.highlight.Highlighter;

import org.apache.lucene.search.highlight.SimpleFragmenter;

import org.apache.lucene.search.highlight.QueryScorer;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.queryParser.MultiFieldQueryParser;

import org.apache.lucene.analysis.TokenStream;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.KeywordAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.index.Term;

import org.apache.lucene.util.Version;

import modules.gk.Gk_infoSub;

import java.util.ArrayList;

import java.io.File;

import java.io.StringReader;

import java.lang.reflect.Constructor;

import web.util.StringUtil;

import web.sys.Globals;

import org.wltea.analyzer.lucene.IKAnalyzer;

//Wizzer.cn

public class LuceneQuery {

private static String indexPath;// 索引生成的目录

private int rowCount;// 记录数

private int pages;// 总页数

private int currentPage;// 当前页数

private int pageSize; //每页记录数

public LuceneQuery() {

this.indexPath = Globals.SYS_COM_CONFIG.get("sys.index.path").toString();

}

public int getRowCount() {

return rowCount;

}

public int getPages() {

return pages;

}

public int getPageSize() {

return pageSize;

}

public int getCurrentPage() {

return currentPage;

}

/**

* 函数功能:根据字段查询索引

*/

public ArrayList queryIndexTitle(String keyWord, int curpage, int pageSize) {

ArrayList list = new ArrayList();

try {

if (curpage <= 0) {

curpage = 1;

}

if (pageSize <= 0) {

pageSize = 20;

}

this.pageSize = pageSize; //每页记录数

this.currentPage = curpage; //当前页

int start = (curpage - 1) * pageSize;

Directory dir = FSDirectory.open(new File(indexPath));

IndexReader reader = IndexReader.open(dir);

IndexSearcher searcher = new IndexSearcher(reader);

Analyzer analyzer = new IKAnalyzer(true);

QueryParser queryParser = new QueryParser(Version.LUCENE_36, "title", analyzer);

queryParser.setDefaultOperator(QueryParser.AND_OPERATOR);

Query query = queryParser.parse(keyWord);

int hm = start + pageSize;

TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);

searcher.search(query, res);

SimpleHTMLFormatter simpleHTMLFormatter = new SimpleHTMLFormatter("", "");

Highlighter highlighter = new Highlighter(simpleHTMLFormatter, new QueryScorer(query));

this.rowCount = res.getTotalHits();

this.pages = (rowCount - 1) / pageSize + 1; //计算总页数

TopDocs tds = res.topDocs(start, pageSize);

ScoreDoc[] sd = tds.scoreDocs;

for (int i = 0; i < sd.length; i++) {

Document hitDoc = reader.document(sd[i].doc);

list.add(createObj(hitDoc, analyzer, highlighter));

}

} catch (Exception e) {

e.printStackTrace();

}

return list;

}

/**

* 函数功能:根据字段查询索引

*/

public ArrayList queryIndexFields(String allkeyword, String onekeyword, String nokeyword, int curpage, int pageSize) {

ArrayList list = new ArrayList();

try {

if (curpage <= 0) {

curpage = 1;

}

if (pageSize <= 0) {

pageSize = 20;

}

this.pageSize = pageSize; //每页记录数

this.currentPage = curpage; //当前页

int start = (curpage - 1) * pageSize;

Directory dir = FSDirectory.open(new File(indexPath));

IndexReader reader = IndexReader.open(dir);

IndexSearcher searcher = new IndexSearcher(reader);

BooleanQuery bQuery = new BooleanQuery(); //组合查询

if (!"".equals(allkeyword)) {//包含全部关键词

KeywordAnalyzer analyzer = new KeywordAnalyzer();

BooleanClause.Occur[] flags = {BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD};//AND

Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, allkeyword, new String[]{"title", "describes", "keywords"}, flags, analyzer);

bQuery.add(query, BooleanClause.Occur.MUST); //AND

}

if (!"".equals(onekeyword)) { //包含任意关键词

Analyzer analyzer = new IKAnalyzer(true);

BooleanClause.Occur[] flags = {BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD};//OR

Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, onekeyword, new String[]{"title", "describes", "keywords"}, flags, analyzer);

bQuery.add(query, BooleanClause.Occur.MUST); //AND

}

if (!"".equals(nokeyword)) { //排除关键词

Analyzer analyzer = new IKAnalyzer(true);

BooleanClause.Occur[] flags = {BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD};//NOT

Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, nokeyword, new String[]{"title", "describes", "keywords"}, flags, analyzer);

bQuery.add(query, BooleanClause.Occur.MUST_NOT); //AND

}

int hm = start + pageSize;

TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);

searcher.search(bQuery, res);

SimpleHTMLFormatter simpleHTMLFormatter = new SimpleHTMLFormatter("", "");

Highlighter highlighter = new Highlighter(simpleHTMLFormatter, new QueryScorer(bQuery));

this.rowCount = res.getTotalHits();

this.pages = (rowCount - 1) / pageSize + 1; //计算总页数

System.out.println("rowCount:" + rowCount);

TopDocs tds = res.topDocs(start, pageSize);

ScoreDoc[] sd = tds.scoreDocs;

Analyzer analyzer = new IKAnalyzer();

for (int i = 0; i < sd.length; i++) {

Document hitDoc = reader.document(sd[i].doc);

list.add(createObj(hitDoc, analyzer, highlighter));

}

} catch (Exception e) {

e.printStackTrace();

}

return list;

}

/**

* 创建返回对象(高亮)

*/

private synchronized static Object createObj(Document doc, Analyzer analyzer, Highlighter highlighter) {

Gk_infoSub gk = new Gk_infoSub();

try {

if (doc != null) {

gk.setIndexno(StringUtil.null2String(doc.get("indexno")));

gk.setPdate(StringUtil.null2String(doc.get("pdate")));

String title = StringUtil.null2String(doc.get("title"));

gk.setTitle(title);

if (!"".equals(title)) {

highlighter.setTextFragmenter(new SimpleFragmenter(title.length()));

TokenStream tk = analyzer.tokenStream("title", new StringReader(title));

String htext = StringUtil.null2String(highlighter.getBestFragment(tk, title));

if (!"".equals(htext)) {

gk.setTitle(htext);

}

}

String keywords = StringUtil.null2String(doc.get("keywords"));

gk.setKeywords(keywords);

if (!"".equals(keywords)) {

highlighter.setTextFragmenter(new SimpleFragmenter(keywords.length()));

TokenStream tk = analyzer.tokenStream("keywords", new StringReader(keywords));

String htext = StringUtil.null2String(highlighter.getBestFragment(tk, keywords));

if (!"".equals(htext)) {

gk.setKeywords(htext);

}

}

String describes = StringUtil.null2String(doc.get("describes"));

gk.setDescribes(describes);

if (!"".equals(describes)) {

highlighter.setTextFragmenter(new SimpleFragmenter(describes.length()));

TokenStream tk = analyzer.tokenStream("describes", new StringReader(describes));

String htext = StringUtil.null2String(highlighter.getBestFragment(tk, describes));

if (!"".equals(htext)) {

gk.setDescribes(htext);

}

}

}

return gk;

}

catch (Exception e) {

e.printStackTrace();

return null;

}

finally {

gk = null;

}

}

private synchronized static Object createObj(Document doc) {

Gk_infoSub gk = new Gk_infoSub();

try {

if (doc != null) {

gk.setIndexno(StringUtil.null2String(doc.get("indexno")));

gk.setPdate(StringUtil.null2String(doc.get("pdate")));

gk.setTitle(StringUtil.null2String(doc.get("title")));

gk.setKeywords(StringUtil.null2String(doc.get("keywords")));

gk.setDescribes(StringUtil.null2String(doc.get("describes")));

}

return gk;

}

catch (Exception e) {

e.printStackTrace();

return null;

}

finally {

gk = null;

}

}

}

Single-field query:

long a = System.currentTimeMillis();

try {

int curpage = StringUtil.StringToInt(StringUtil.null2String(form.get("curpage")));

int pagesize = StringUtil.StringToInt(StringUtil.null2String(form.get("pagesize")));

String title = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("title")));

LuceneQuery lu = new LuceneQuery();

form.addResult("list", lu.queryIndexTitle(title, curpage, pagesize));

form.addResult("curPage", lu.getCurrentPage());

form.addResult("pageSize", lu.getPageSize());

form.addResult("rowCount", lu.getRowCount());

form.addResult("pageCount", lu.getPages());

} catch (Exception e) {

e.printStackTrace();

}

long b = System.currentTimeMillis();

long c = b - a;

System.out.println("[搜索信息花费时间:" + c + "毫秒]");

Multi-field query:

long a = System.currentTimeMillis();

try {

int curpage = StringUtil.StringToInt(StringUtil.null2String(form.get("curpage")));

int pagesize = StringUtil.StringToInt(StringUtil.null2String(form.get("pagesize")));

String allkeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("allkeyword")));

String onekeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("onekeyword")));

String nokeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("nokeyword")));

LuceneQuery lu = new LuceneQuery();

form.addResult("list", lu.queryIndexFields(allkeyword,onekeyword,nokeyword, curpage, pagesize));

form.addResult("curPage", lu.getCurrentPage());

form.addResult("pageSize", lu.getPageSize());

form.addResult("rowCount", lu.getRowCount());

form.addResult("pageCount", lu.getPages());

} catch (Exception e) {

e.printStackTrace();

}

long b = System.currentTimeMillis();

long c = b - a;

System.out.println("[高级检索花费时间:" + c + "毫秒]");
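The paging arithmetic used throughout LuceneQuery can be isolated into a few lines. This is a sketch in JavaScript that mirrors the Java expressions above (`(rowCount - 1) / pageSize + 1` and `(curpage - 1) * pageSize`); the function name `pageWindow` and the returned `end` field are hypothetical additions for illustration.

```javascript
// Same arithmetic as LuceneQuery: defaults for bad input, total pages,
// and the index range of hits that belongs to the requested page.
function pageWindow(rowCount, curPage, pageSize) {
  const page = curPage > 0 ? curPage : 1;
  const size = pageSize > 0 ? pageSize : 20;
  // Math.trunc mirrors Java's integer division, which truncates toward zero.
  const pages = Math.trunc((rowCount - 1) / size) + 1;
  const start = (page - 1) * size;              // index of the first hit on the page
  const end = Math.min(start + size, rowCount); // exclusive upper bound
  return { page: page, size: size, pages: pages, start: start, end: end };
}

// 45 hits, page 3, 20 per page: the last page holds hits 40 to 44.
const lastPage = pageWindow(45, 3, 20);
```

Note that the collector has to be created with `start + pageSize` hits, because the top-N collector must gather every hit up to the end of the requested page before the page itself can be cut out.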

BooleanQuery bQuery = new BooleanQuery(); //组合查询

if (!"".equals(title)) {

WildcardQuery w1 = new WildcardQuery(new Term("title", title+ "*"));

bQuery.add(w1, BooleanClause.Occur.MUST); //AND

}

int hm = start + pageSize;

TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);

searcher.search(bQuery, res);

Implements this SQL: (unitid like 'unitid%' and idml like 'id2%') or (tounitid like 'unitid%' and tomlid like 'id2%' and tostate=1)

BooleanQuery bQuery = new BooleanQuery();

BooleanQuery b1 = new BooleanQuery();

WildcardQuery w1 = new WildcardQuery(new Term("unitid", unitid + "*"));

WildcardQuery w2 = new WildcardQuery(new Term("idml", id2 + "*"));

b1.add(w1, BooleanClause.Occur.MUST);//AND

b1.add(w2, BooleanClause.Occur.MUST);//AND

bQuery.add(b1, BooleanClause.Occur.SHOULD);//OR

BooleanQuery b2 = new BooleanQuery();

WildcardQuery w3 = new WildcardQuery(new Term("tounitid", unitid + "*"));

WildcardQuery w4 = new WildcardQuery(new Term("tomlid", id2 + "*"));

WildcardQuery w5 = new WildcardQuery(new Term("tostate", "1"));

b2.add(w3, BooleanClause.Occur.MUST);//AND

b2.add(w4, BooleanClause.Occur.MUST);//AND

b2.add(w5, BooleanClause.Occur.MUST);//AND

bQuery.add(b2, BooleanClause.Occur.SHOULD);//OR
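The nesting can be read as ordinary boolean logic. The following sketch evaluates the same condition against a plain object in JavaScript; it illustrates the MUST/SHOULD semantics only and does not use Lucene. `matchesNested` is a hypothetical name, and the field values are assumed to be strings as they are in the index.

```javascript
// Plain-JavaScript reading of the nested query: each inner group is an AND
// of prefix matches (MUST), and the two groups are ORed together (SHOULD).
function matchesNested(doc, unitid, id2) {
  const starts = (value, prefix) => String(value || '').indexOf(prefix) === 0;
  const own = starts(doc.unitid, unitid) && starts(doc.idml, id2);
  const shared =
    starts(doc.tounitid, unitid) && starts(doc.tomlid, id2) && doc.tostate === '1';
  return own || shared;
}
```

A BooleanQuery whose clauses are all SHOULD matches when at least one of them matches, which is why the outer level behaves like OR.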

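The approach in the next snippet is not very sensible. It is better to sort when building the index, so that queries only need to paginate; if there are several sort orders, build a separate index for each.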

int hm = start + pageSize;

Sort sort = new Sort(new SortField("pdate", SortField.STRING, true));

TopScoreDocCollector res = TopScoreDocCollector.create(pageSize, false);

searcher.search(bQuery, res);

this.rowCount = res.getTotalHits();

this.pages = (rowCount - 1) / pageSize + 1; // total pages

TopDocs tds =searcher.search(bQuery,rowCount,sort);// res.topDocs(start, pageSize);

ScoreDoc[] sd = tds.scoreDocs;

System.out.println("rowCount:" + rowCount);

int i=0;

for (ScoreDoc scoreDoc : sd) {

i++;

if(i<=start){ // i is 1-based here, so skip the first `start` hits

continue;

}

if(i>hm){

break;

}

Document doc = searcher.doc(scoreDoc.doc);

list.add(createObj(doc));

}

int hm = start + pageSize;

Sort sort = new Sort();

SortField sortField = new SortField("pdate", SortField.STRING, true);

sort.setSort(sortField);

TopDocs hits = searcher.search(bQuery, null, hm, sort);

this.rowCount = hits.totalHits;

this.pages = (rowCount - 1) / pageSize + 1; //计算总页数

for (int i = start; i < hits.scoreDocs.length; i++) {

ScoreDoc sdoc = hits.scoreDocs[i];

Document doc = searcher.doc(sdoc.doc);

list.add(createObj(doc));

}

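PS: On Monday I finished the scheduled job that builds the index; on Tuesday, fuzzy queries with Chinese word segmentation, highlighting, and paging; today, wildcard queries, nested queries, and sort-then-paginate. Going from zero to the main Lucene features took three days. How it performs, of course, still needs testing and tuning.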

select to_char(sysdate,'YYYY/MM/DD') FROM DUAL; -- 2007/09/20

select to_char(sysdate,'YYYY') FROM DUAL; -- 2007

select to_char(sysdate,'YYY') FROM DUAL; -- 007

select to_char(sysdate,'YY') FROM DUAL; -- 07

select to_char(sysdate,'MM') FROM DUAL; -- 09

select to_char(sysdate,'DD') FROM DUAL; -- 20

select to_char(sysdate,'D') FROM DUAL; -- 5

select to_char(sysdate,'DDD') FROM DUAL; -- 263

select to_char(sysdate,'WW') FROM DUAL; -- 38

select to_char(sysdate,'W') FROM DUAL; -- 3

select to_char(sysdate,'YYYY/MM/DD HH24:MI:SS') FROM DUAL; -- 2007/09/20 15:24:13

select to_char(sysdate,'YYYY/MM/DD HH:MI:SS') FROM DUAL; -- 2007/09/20 03:25:23

select to_char(sysdate,'J') FROM DUAL; -- 2454364

select to_char(sysdate,'RR/MM/DD') FROM DUAL; -- 07/09/20
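Some of the less obvious format codes (D, DDD, WW, W) can be cross-checked by recomputing them for the sample date 2007-09-20. The sketch below does this in JavaScript. It assumes D counts Sunday as day 1 (in Oracle this depends on the NLS territory setting), and the function name `oracleLikeFields` is hypothetical.

```javascript
// Recompute a few of the to_char fields for a given date, using UTC so the
// result does not depend on the local time zone.
function oracleLikeFields(date) {
  const year = date.getUTCFullYear();
  const jan1 = Date.UTC(year, 0, 1);
  const dayOfYear = Math.floor((date.getTime() - jan1) / 86400000) + 1;
  return {
    DDD: dayOfYear,                                 // day of year
    WW: Math.floor((dayOfYear - 1) / 7) + 1,        // week of year, week 1 starts on Jan 1
    W: Math.floor((date.getUTCDate() - 1) / 7) + 1, // week of month
    D: date.getUTCDay() + 1,                        // day of week, assuming Sunday = 1
  };
}

// 2007-09-20 is the date shown in the sample output.
const fields = oracleLikeFields(new Date(Date.UTC(2007, 8, 20)));
```

For that date the function gives DDD 263, WW 38, W 3, and D 5, matching the values listed in the comments.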

function sel(obj){

var id=obj.value;

var qx=document.getElementsByName("id");

for(var i = 0; i < qx.length; i++){

var v = qx[i].value;

if(v!=""&&v.length>id.length&&v.startWith(id)){

if(obj.checked){

qx[i].checked=true;

} else{

qx[i].checked=false;

}

}

if(v!=""&&v.length<id.length&&id.startWith(v)){

if(obj.checked){

} else{

qx[i].checked=false;

}

}

}

}

0001 00010001 00010002 00010003
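The cascading rule in sel() can also be written as a pure function over the hierarchical codes, which makes it easy to try out without a page. This is a sketch under the assumption that a child's code always starts with its parent's code, as in the sample values above; `cascade` is a hypothetical name.

```javascript
// Pure version of the cascade: `state` maps each code to true/false.
// Checking or unchecking a code applies to all its descendants (codes that
// start with it); unchecking a code also unchecks its ancestors.
function cascade(state, id, checked) {
  const result = Object.assign({}, state);
  result[id] = checked;
  for (const code of Object.keys(result)) {
    if (code === '') continue; // ignore empty values, as the original does
    if (code.length > id.length && code.indexOf(id) === 0) {
      result[code] = checked;
    } else if (!checked && code.length < id.length && id.indexOf(code) === 0) {
      result[code] = false;
    }
  }
  return result;
}

const initial = { '0001': false, '00010001': false, '00010002': false, '00010003': false };
const allOn = cascade(initial, '0001', true);          // parent checked: children follow
const oneOff = cascade(allOn, '00010002', false);      // child unchecked: parent follows
```

Returning a new object instead of mutating the checkboxes directly keeps the rule separate from the DOM, so the same logic can drive any list of inputs.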

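Cixi, on the pretext of building a naval academy, diverted navy funds to build the Summer Palace. A minister asked: if the navy trains on Kunming Lake, how will the warships get out once training is done? Cixi: I had a great immortal divine it. On the day the navy is ready, a once-in-a-century rainstorm will fall from the sky and turn the capital into a land of water, and then the navy can sail from Kunming Lake straight for Japan. The minister worried the rain might not come. Cixi said: the immortal said that as long as the court is a once-in-five-thousand-years one, the rain will surely fall. In the end, it didn't rain that year~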